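This section implements linear regression with TensorFlow 2.

For an explanation of the algorithm and a basic implementation, see the earlier post "Andrew Ng's Machine Learning: Linear Regression".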

First, import the packages:

    import random

    import tensorflow as tf
    from matplotlib import pyplot as plt

    print(tf.__version__)

Output:

2.1.0

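Next we generate the data. We fix "true" parameters w and b, draw random input features, compute the labels from the features with those parameters, and then add a small amount of noise.

Here we use 2 features and 1000 examples, with w = [2, -3.4] and b = 4.2.

    num_inputs = 2
    num_examples = 1000
    true_w = [2, -3.4]
    true_b = 4.2
    # Draw input features from a normal distribution with standard deviation 1
    features = tf.random.normal((num_examples, num_inputs), stddev=1)
    labels = true_w[0] * features[:, 0] + true_w[1] * features[:, 1] + true_b
    # Add random noise with standard deviation 0.01
    labels += tf.random.normal(labels.shape, stddev=0.01)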
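To make the generating formula concrete, here is a minimal plain-Python sketch (no TensorFlow) that builds a single synthetic example the same way: label = 2 * x1 - 3.4 * x2 + 4.2 + noise. The helper name `make_example` and the fixed seed are illustrative assumptions, not part of the original code.

```python
import random

TRUE_W = [2, -3.4]
TRUE_B = 4.2

def make_example(rng, noise_std=0.01):
    """Return (features, label) for one synthetic example (hypothetical helper)."""
    # Features drawn from a standard normal distribution
    x = [rng.gauss(0.0, 1.0) for _ in TRUE_W]
    # Noise-free label from the true parameters
    clean = sum(w_i * x_i for w_i, x_i in zip(TRUE_W, x)) + TRUE_B
    # Add Gaussian noise with a small standard deviation
    return x, clean + rng.gauss(0.0, noise_std)

rng = random.Random(0)
x, y = make_example(rng)
noise_free = TRUE_W[0] * x[0] + TRUE_W[1] * x[1] + TRUE_B
print(x, y, abs(y - noise_free))
```

The last printed number is the size of the added noise, which should be on the order of 0.01.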

Now print the first feature vector and its label:

    print(features[0], labels[0])

Output:

tf.Tensor([ 0.60977983 -0.21984003], shape=(2,), dtype=float32) tf.Tensor(6.182882, shape=(), dtype=float32)

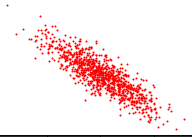

Draw a scatter plot of the second feature against the labels:

    def set_figsize(figsize=(3.5, 2.5)):
        plt.rcParams['figure.figsize'] = figsize

    set_figsize()
    plt.scatter(features[:, 1], labels, c='r', s=1)

Next we split the data into mini-batches and return one batch at a time. The examples are shuffled before splitting, and tf.gather selects rows of the original data according to indices:

    def data_iter(batch_size, features, labels):
        num_examples = len(features)
        indices = list(range(num_examples))
        random.shuffle(indices)
        for i in range(0, num_examples, batch_size):
            j = indices[i:min(i + batch_size, num_examples)]
            # tf.gather picks the rows listed in j
            yield tf.gather(features, axis=0, indices=j), tf.gather(labels, axis=0, indices=j)
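The index logic of the batching function can be checked without TensorFlow. The sketch below reproduces only the shuffle-and-slice step; `batch_indices` is a hypothetical helper name, and the seeded `random.Random` is an assumption added for reproducibility. It shows that 1000 examples with a batch size of 64 give 16 batches, the last one holding only 40 examples.

```python
import random

def batch_indices(num_examples, batch_size, rng):
    """Yield lists of shuffled indices, one list per mini-batch (illustrative helper)."""
    indices = list(range(num_examples))
    rng.shuffle(indices)
    for i in range(0, num_examples, batch_size):
        # Slicing past the end is safe in Python, so min() is not strictly needed
        yield indices[i:i + batch_size]

batches = list(batch_indices(1000, 64, random.Random(0)))
sizes = [len(b) for b in batches]
print(len(batches), sizes[0], sizes[-1])  # 16 64 40
```

Every index appears in exactly one batch, so one pass over the generator is one epoch.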

Let's try it out:

    batch_size = 64
    for X, y in data_iter(batch_size, features, labels):
        print(X, y)
        break

This prints one batch of 64 examples:

tf.Tensor( [[-1.568652 1.1892316 ] [ 0.02704381 -1.2114034 ] [ 0.54140687 0.6874146 ] [-0.3238443 -0.7069099 ] [ 0.670013 1.0555037 ] [ 1.4785416 -0.02862001] [-1.5540386 -0.90648144] [-2.657925 -0.34048915] [-0.13981636 0.45385957] [-0.0776669 -0.02562523] [ 0.15774341 1.3849018 ] [ 0.9904404 0.49320254] [-0.5353007 1.9172873 ] [-0.7673046 1.2029607 ] [ 1.9484698 -0.25718805] [-1.5775664 0.23291822] [ 1.2565771 -0.39332908] [ 0.5235608 -0.60813963] [ 0.2633382 -1.6159766 ] [ 1.8978524 1.3576044 ] [-0.40452728 0.02824024] [-0.6108405 0.1997418 ] [ 0.28169724 -1.2480029 ] [ 1.1958692 2.5761182 ] [-0.01832403 0.2854444 ] [ 0.02692759 -0.79011744] [ 1.9638565 0.14134032] [-0.53969157 -1.1126633 ] [ 1.3907293 -1.3411927 ] [-1.2987695 1.61959 ] [-2.0938413 -0.20309015] [-0.9750384 0.8229153 ] [-1.7626753 0.4005635 ] [ 0.22338685 -0.72602 ] [-0.21097755 -0.3210915 ] [-1.3122041 2.3579533 ] [-0.3418174 -1.2656993 ] [-1.5983564 -0.02388086] [-1.0887327 -0.6083594 ] [ 0.16343872 -1.0361065 ] [ 0.4365194 1.3847867 ] [ 0.2617833 0.9032589 ] [ 0.5480683 1.3676795 ] [-0.73368275 2.49532 ] [-1.5379615 0.891124 ] [ 0.20122519 -0.00788717] [ 0.5612905 -0.9636846 ] [-0.08743163 0.31147835] [ 1.6043682 0.913555 ] [-1.4654535 -0.648391 ] [-0.45734385 -2.094514 ] [-0.05061309 0.68695724] [-0.05295819 0.1176275 ] [ 0.5119573 0.04384377] [-0.88304853 -0.26165497] [-0.39841247 0.13567868] [ 1.6472635 1.2322218 ] [-0.1735344 0.5267474 ] [-0.8581472 0.30082825] [ 0.45566842 -0.07357331] [-0.747671 -0.27977103] [-0.40926522 -1.5160431 ] [ 1.0966959 1.6514026 ] [-0.4333734 0.10984586]], shape=(64, 2), dtype=float32) tf.Tensor( [-2.9873667 8.377133 2.946247 5.9545803 1.9532868 7.249343 4.1806116 0.03477701 2.3633275 4.1365757 -0.18981844 4.5226593 -3.3917785 -1.4252613 8.998332 0.23443112 8.046925 7.302883 10.212007 3.4053535 3.2838557 2.3075964 8.993652 -2.153344 3.205419 6.938238 7.631661 6.9013247 11.54775 -3.912147 0.70706934 -0.5570358 -0.70196784 7.121228 4.8730345 -6.4330792 7.812588 
1.0836437 4.1145034 8.063896 0.35680652 1.6736523 0.64509416 -5.7431006 -1.9141186 4.6364574 8.593288 2.9628947 4.298334 3.4748955 10.410857 1.7619439 3.6892602 5.0799007 3.3337908 2.9197962 3.3119173 2.0573657 1.463488 5.371661 3.6591358 8.535518 0.78479844 2.942409 ], shape=(64,), dtype=float32)

Then we initialize the parameters w and b:

    # Initialize the parameters
    w = tf.Variable(tf.random.normal((num_inputs, 1), stddev=0.01))
    b = tf.Variable(tf.zeros((1,)))

Define the linear model (the forward pass):

    def linreg(X, w, b):
        return tf.matmul(X, w) + b

Define the loss, a per-example squared error:

    def squared_loss(y_hat, y):
        # Reshape y to match y_hat before subtracting
        return (y_hat - tf.reshape(y, y_hat.shape)) ** 2 / 2
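The reshape inside the loss matters because the model output has shape (batch, 1) while the labels have shape (batch,). The sketch below uses NumPy rather than TensorFlow, on the assumption that NumPy is available; TensorFlow follows the same NumPy-style broadcasting rules. The three sample values are made up for illustration.

```python
import numpy as np

y_hat = np.array([[1.0], [2.0], [3.0]])  # model output, shape (3, 1)
y = np.array([1.5, 2.5, 2.0])            # labels, shape (3,)

# Without the reshape, (3, 1) - (3,) broadcasts to a (3, 3) matrix
wrong = (y_hat - y) ** 2 / 2
# With the reshape, the result keeps one loss value per example
right = (y_hat - y.reshape(y_hat.shape)) ** 2 / 2

print(wrong.shape, right.shape)  # (3, 3) (3, 1)
print(right.ravel())
```

Skipping the reshape would not raise an error; it would silently compute a loss over every pairing of prediction and label.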

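Define the parameter update (mini-batch stochastic gradient descent):

    def sgd(params, lr, batch_size, grads):
        for i, param in enumerate(params):
            # Update in place; dividing by batch_size averages the summed gradients
            param.assign_sub(lr * grads[i] / batch_size)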
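A single update step is easy to verify by hand. The sketch below is a plain-Python version of the same rule, param = param - lr * grad / batch_size, applied to made-up numbers; `sgd_step` and the sample values are illustrative assumptions. The division by batch_size is there because the gradients are summed over the batch rather than averaged.

```python
def sgd_step(params, grads, lr, batch_size):
    """Return updated parameters: p - lr * g / batch_size (illustrative helper)."""
    return [p - lr * g / batch_size for p, g in zip(params, grads)]

# Gradients summed over a hypothetical batch of 4 examples
params = [0.5, -1.0]
grads = [8.0, -4.0]
new_params = sgd_step(params, grads, lr=0.03, batch_size=4)
print(new_params)  # approximately [0.44, -0.97]
```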

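Then we make 3 passes over the whole dataset, i.e. num_epochs = 3:

    lr = 0.03
    num_epochs = 3
    net = linreg
    loss = squared_loss

    for epoch in range(num_epochs):
        for X, y in data_iter(batch_size, features, labels):
            with tf.GradientTape() as t:
                # Variables are watched automatically; the explicit watch is harmless
                t.watch([w, b])
                # Per-example loss on the current mini-batch
                l = loss(net(X, w, b), y)
            grads = t.gradient(l, [w, b])
            sgd([w, b], lr, batch_size, grads)
        train_l = loss(net(features, w, b), labels)
        # Use % formatting; str.format would print the %d and %f placeholders literally
        print('epoch %d, loss %f' % (epoch + 1, tf.reduce_mean(train_l)))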
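In the loop above, the loss passed to t.gradient is not a scalar but one value per example; in that case the tape returns the gradient of the sum of those values. For this model that sum has a simple closed form, which the plain-Python sketch below checks against a finite-difference estimate. The function names and the three sample points are illustrative assumptions.

```python
def summed_loss(w, b, xs, ys):
    """Sum over the batch of (y_hat - y)^2 / 2 for a linear model."""
    total = 0.0
    for x, y in zip(xs, ys):
        y_hat = sum(wi * xi for wi, xi in zip(w, x)) + b
        total += (y_hat - y) ** 2 / 2
    return total

def analytic_grads(w, b, xs, ys):
    """Gradient of summed_loss: sum of err * x for w, sum of err for b."""
    gw = [0.0] * len(w)
    gb = 0.0
    for x, y in zip(xs, ys):
        err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
        for k, xk in enumerate(x):
            gw[k] += err * xk
        gb += err
    return gw, gb

xs = [[1.0, 2.0], [0.5, -1.0], [-1.5, 0.3]]
ys = [3.0, 1.0, -2.0]
w, b = [0.1, -0.2], 0.05

gw, gb = analytic_grads(w, b, xs, ys)

# Central finite differences as an independent check
eps = 1e-6
num_gw0 = (summed_loss([w[0] + eps, w[1]], b, xs, ys)
           - summed_loss([w[0] - eps, w[1]], b, xs, ys)) / (2 * eps)
num_gb = (summed_loss(w, b + eps, xs, ys)
          - summed_loss(w, b - eps, xs, ys)) / (2 * eps)
print(gw[0], num_gw0, gb, num_gb)
```

The analytic and numerical values should agree to several decimal places, which is the quantity the update step then divides by the batch size.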

Finally, print the result:

    print(true_w, w)
    print(true_b, b)

The output shows that the weights 2 and -3.4 were fitted to about 1.64 and -2.59, and the bias 4.2 to about 3.16:

    [2, -3.4] <tf.Variable 'Variable:0' shape=(2, 1) dtype=float32, numpy=
    array([[ 1.6446801],
           [-2.5876393]], dtype=float32)>
    4.2 <tf.Variable 'Variable:0' shape=(1,) dtype=float32, numpy=array([3.159864], dtype=float32)>
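These estimates are still some way from the true values. With a batch size of 64, three epochs amount to only 48 parameter updates, which is probably too few at a learning rate of 0.03. The sketch below re-implements the same mini-batch SGD in plain Python (it is not the TensorFlow code above) to compare 3 epochs with 30. The function name, the seed, the zero initialization and the choice to divide by the actual batch length are all assumptions made for illustration.

```python
import random

def train(num_epochs, lr=0.03, batch_size=64, num_examples=1000, seed=0):
    """Plain-Python mini-batch SGD on synthetic data (illustrative sketch)."""
    rng = random.Random(seed)
    true_w, true_b = [2.0, -3.4], 4.2
    xs = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(num_examples)]
    ys = [true_w[0] * x[0] + true_w[1] * x[1] + true_b + rng.gauss(0, 0.01)
          for x in xs]
    w, b = [0.0, 0.0], 0.0
    indices = list(range(num_examples))
    for _ in range(num_epochs):
        rng.shuffle(indices)
        for i in range(0, num_examples, batch_size):
            batch = indices[i:i + batch_size]
            gw, gb = [0.0, 0.0], 0.0
            for j in batch:
                err = w[0] * xs[j][0] + w[1] * xs[j][1] + b - ys[j]
                gw[0] += err * xs[j][0]
                gw[1] += err * xs[j][1]
                gb += err
            # Average the summed gradients over the actual batch length
            w = [w[k] - lr * gw[k] / len(batch) for k in range(2)]
            b -= lr * gb / len(batch)
    return w, b

w3, b3 = train(num_epochs=3)
w30, b30 = train(num_epochs=30)
print(w3, b3)
print(w30, b30)
```

Under these assumptions the 30-epoch run should land close to [2, -3.4] and 4.2, while the 3-epoch run stops short in the same way as the result above.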

Below is a more compact implementation that also gives a better result. It uses Keras, TensorFlow's high-level API.

As before, start with the imports:

    import tensorflow as tf

    print(tf.__version__)

Output:

2.1.0

Then generate the data:

    num_input = 2
    num_example = 1000
    true_w = [2, -3.4]
    true_b = 4.2
    features = tf.random.normal(shape=(num_example, num_input), stddev=1)
    labels = true_w[0] * features[:, 0] + true_w[1] * features[:, 1] + true_b
    labels += tf.random.normal(labels.shape, stddev=0.01)

Unlike before, this time we use TensorFlow's Dataset API to package the data:

    from tensorflow import data as tddata

    batch_size = 10
    # Combine the features and labels of the training data
    dataset = tddata.Dataset.from_tensor_slices((features, labels))
    # Read random mini-batches
    dataset = dataset.shuffle(buffer_size=num_example)
    dataset = dataset.batch(batch_size)
    data_iter = iter(dataset)
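The buffer_size argument controls how thoroughly the data is shuffled: elements are sampled at random from a buffer of that size, and each sampled element is replaced by the next one from the input. The plain-Python sketch below is a simplified model of that behaviour, not TensorFlow's actual implementation; `buffered_shuffle` is a hypothetical helper. It shows why buffer_size is set to the number of examples here.

```python
import random

def buffered_shuffle(items, buffer_size, rng):
    """Yield items in an order produced by sampling from a fixed-size buffer."""
    buffer = []
    for item in items:
        if len(buffer) < buffer_size:
            buffer.append(item)   # still filling the buffer
            continue
        k = rng.randrange(buffer_size)
        yield buffer[k]           # emit a random buffered element
        buffer[k] = item          # and put the incoming element in its slot
    rng.shuffle(buffer)           # drain what is left in random order
    yield from buffer

rng = random.Random(0)
full = list(buffered_shuffle(range(10), buffer_size=10, rng=rng))
tiny = list(buffered_shuffle(range(10), buffer_size=1, rng=rng))
print(full)
print(tiny)  # buffer_size=1 leaves the order unchanged
```

A buffer as large as the dataset gives a full shuffle, whereas a small buffer only mixes nearby elements.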

Print one batch:

    for (batch, (X, y)) in enumerate(dataset):
        print(batch, X, y)
        break

The output is:

0 tf.Tensor( [[-1.3183944 0.88571453] [ 0.5582473 0.6283028 ] [ 0.3318851 0.30217335] [ 0.5585688 1.625666 ] [ 1.3739443 -0.2193088 ] [-1.1692793 -1.3866609 ] [ 0.76365966 1.0310326 ] [-1.7447253 1.4246174 ] [-0.4479057 -0.52858967] [ 0.6691452 -0.44063178]], shape=(10, 2), dtype=float32) tf.Tensor( [-1.4440066 3.1915112 3.8415916 -0.20795591 7.6803966 6.574926 2.2208211 -4.1473265 5.0976176 7.0480514 ], shape=(10,), dty

Next, define the network structure and initialize its parameters:

    from tensorflow import keras
    from tensorflow import initializers as init

    model = keras.Sequential()
    model.add(keras.layers.Dense(1, kernel_initializer=init.RandomNormal(stddev=0.01)))

Define the loss function:

    from tensorflow import losses

    loss = losses.MeanSquaredError()

Define the parameter update method (the optimizer):

    from tensorflow import optimizers

    trainer = optimizers.SGD(learning_rate=0.03)

The training loop:

    num_epochs = 3
    for epoch in range(1, num_epochs + 1):
        for (batch, (X, y)) in enumerate(dataset):
            with tf.GradientTape() as t:
                l = loss(model(X, training=True), y)
            grads = t.gradient(l, model.trainable_variables)
            trainer.apply_gradients(zip(grads, model.trainable_variables))
        l = loss(model(features), labels)
        print('epoch %d, loss: %f' % (epoch, l))

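Printing the result shows a much closer fit than the previous version. The built-in classes are not the cause in themselves: this run uses batch_size = 10 rather than 64, so it performs far more parameter updates, and MeanSquaredError omits the 1/2 factor, so each update is larger.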

    true_w, model.get_weights()[0]

    ([2, -3.4],
     array([[ 2.0009027],
            [-3.4000764]], dtype=float32))

    true_b, model.get_weights()[1]

    (4.2, array([4.2001143], dtype=float32))
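A rough back-of-the-envelope calculation suggests where the difference between the two versions comes from. The sketch below counts the parameter updates in each run and, under the idealized assumption that every update shrinks the remaining error by a constant factor, estimates how much of the initial error is left. The helper name and the constant-factor model are assumptions for illustration, not a precise analysis.

```python
import math

def num_updates(num_examples, batch_size, num_epochs):
    """Total number of parameter updates over all epochs."""
    return math.ceil(num_examples / batch_size) * num_epochs

manual_steps = num_updates(1000, 64, 3)  # first version: batch size 64
keras_steps = num_updates(1000, 10, 3)   # Keras version: batch size 10

# Idealized assumption: unit-variance features, constant shrink factor per step.
# The first version uses squared error / 2, so the factor is about (1 - lr).
# MeanSquaredError has no 1/2, so the factor is about (1 - 2 * lr).
lr = 0.03
manual_remaining = (1 - lr) ** manual_steps
keras_remaining = (1 - 2 * lr) ** keras_steps
print(manual_steps, keras_steps)  # 48 300
print(manual_remaining, keras_remaining)
```

Roughly a fifth of the initial error remains after 48 steps, which is in line with the bias reaching about 3.16 instead of 4.2 in the first version, while after 300 larger steps the remaining error is negligible.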
